The New Math


Friday’s news conference on the release of school performance ratings featured a bizarre dynamic in the annals of education PR: the higher the ratings, the more Education Commissioner Robert Scott and a half-dozen assembled district officials worried about taking a beating in the media.

That’s because the Texas Projection Measure, which credits schools for students who fail state tests but are projected to pass in the future, has sent the ratings soaring in the past two years, to the point where many distrust them. Playing defense, Scott and the district officials rattled off a shotgun blast of school data, some relevant and some not. More than one speaker admonished that the measure was “about the children.”

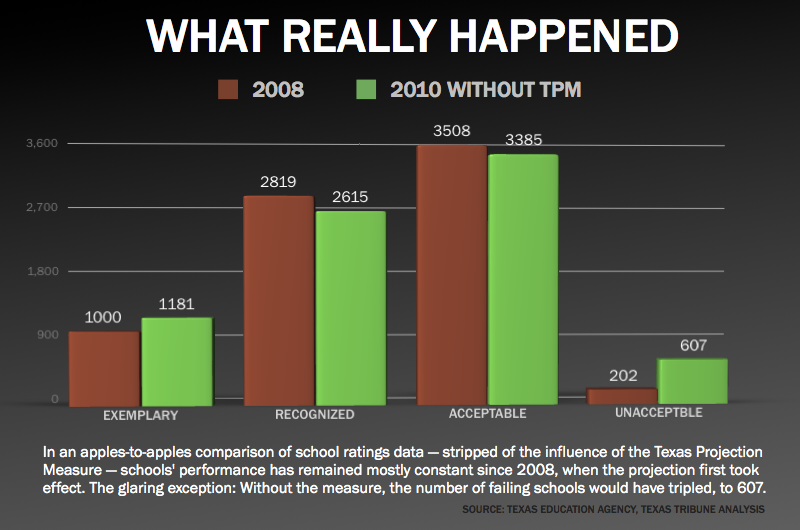

The state now calls three-fourths of Texas schools “exemplary” or “recognized,” up from fewer than half of campuses two years ago. But a Texas Tribune analysis of data that the Texas Education Agency did not highlight on Friday shows that Texas public schools — when decoupled from the controversial projection measure — have not served children much better since 2008, the year before the new accountability formula took effect. In fact, schools may have regressed overall or, at best, stagnated, even as thousands of educators have basked in new and distinguished-sounding performance labels.

Indeed, the primary real-world effect of the measure has been to mask what would have been a huge increase in the number of “unacceptable” schools. The projection prevented a tripling in the number of failing schools in just two years, from 202 to 607, according to the Tribune analysis. Instead, the number of “unacceptable” schools declined to 125, which amounts to just 1.6 percent of all Texas schools this year.

Without the projection measure, Friday’s news conference would have been much rougher on Scott and his district colleagues than it already was. Absent the projections, the number of “recognized” and “acceptable” schools would have declined by 204 and 123, respectively, in the last two years. The news would not have been all bad: The number of “exemplary” schools did legitimately rise from 1,000 to 1,181, a healthy increase of 18 percent, without help from the projection. But that’s hardly the jump-for-joy kind of gain the state sheepishly announced Friday: an increase of 162 percent in the number of “exemplary” schools in just two years, from 1,000 to 2,624.
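For readers who want to check the arithmetic, the percentages above can be reproduced from the school counts cited in this story. The short Python sketch below is illustrative only: the variable names and the `pct_change` helper are ours, not part of the TEA data or the Tribune analysis.

```python
# Counts taken from the story; the names are illustrative, not TEA fields.
exemplary_2008 = 1000
exemplary_2010_without_tpm = 1181   # "exemplary" schools without the projection
exemplary_2010_with_tpm = 2624      # "exemplary" schools as officially rated
unacceptable_2008 = 202
unacceptable_2010_without_tpm = 607


def pct_change(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Growth in "exemplary" schools without the projection measure
legit_gain = round(pct_change(exemplary_2008, exemplary_2010_without_tpm))

# Growth in "exemplary" schools as announced by the state
announced_gain = round(pct_change(exemplary_2008, exemplary_2010_with_tpm))

# How many times larger the failing-school count would have been
failing_multiple = unacceptable_2010_without_tpm / unacceptable_2008

print(legit_gain, announced_gain, round(failing_multiple, 2))
```

Run as written, the two percentage figures round to 18 and 162, and the failing-school count without the projection comes out at almost exactly three times the 2008 figure.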

Past results, future performance

Though the TEA calls the Texas Projection Measure a “growth measure,” it does not measure growth. Rather, it uses regression analysis to predict future growth based on a static score, TEA officials have said. Before the measure was implemented statewide, the TEA tested the accuracy of the projection, running the data on all Texas student scores from the 2007-2008 school year to the 2008-2009 school year.

Did it predict scores accurately? Not really. Projections calling for failing students to pass the next year were wrong between 19 and 48 percent of the time, depending on the grade and subject, according to data recently released at the urging of state Rep. Scott Hochberg, D-Houston, the vice chairman of the House Public Education Committee.

In response to the criticism about projection accuracy, the TEA on Friday presented accuracy data for the projections made between 2008-2009 and 2009-2010. The projections proved more on target this time, which state officials have attributed to a tweaking of the formula: The predictions now are based on two years of student data rather than one. Another key factor that could boost the accuracy of the results: Educators this year likely paid more attention to students on the borderline of being counted as passing by the projection measure, the easiest way to boost a school’s rating. Still, error rates for students who failed but were predicted to pass (the only ones that matter to school ratings) ranged from 9 percent in 10th grade science to 29 percent in 10th grade English, according to TEA data. Those figures represent the accuracy of projections used to boost school ratings for the last school year. The accuracy of projections used in the annual ratings released on Friday won’t be known until subsequent years.

“Acceptable” failure

The boost in high-performance labeling troubles many critics (about a third of Texas schools are now “exemplary,” compared with 13 percent two years ago), but that increase may have less practical impact than the formula’s effective elimination of failing status for hundreds of campuses. Sparing a school the dreaded “unacceptable” label does far more than save schools and education officials from public embarrassment. The whole point of accountability systems is to spotlight failure in order to force local schools and districts to own up to their lack of performance and to take concrete action toward reform. That will no longer happen for students at the 482 schools that the Texas Projection Measure saved from the “unacceptable” rating this year. Those schools, where between 30 and 50 percent of students fail state tests, depending on the subject, are now lumped into the amorphous category of “acceptable.” (Meanwhile, thousands of formerly “acceptable” schools are now “recognized,” and the formerly “recognized” are now “exemplary.”)
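The 482 figure, and the shares of “exemplary” schools cited above, follow from counts reported earlier in this story. The Python sketch below works through them. One loud assumption: the total number of Texas schools is not stated in the story, so it is backed out here from the statement that 125 schools make up 1.6 percent of the total, and that same approximate total is reused for 2008, when the real count may have differed. All variable names are illustrative.

```python
# Counts taken from the story; the names are illustrative.
unacceptable_without_tpm = 607   # failing schools if projections are ignored
unacceptable_official = 125      # failing schools as officially rated
exemplary_2010 = 2624
exemplary_2008 = 1000

# Schools the projection measure moved out of the "unacceptable" category
saved_by_tpm = unacceptable_without_tpm - unacceptable_official

# ASSUMPTION: total schools is inferred from "125 is 1.6 percent of all
# Texas schools", so it is only approximate.
implied_total = unacceptable_official / 0.016

# Share of schools rated "exemplary" now and two years ago.
# ASSUMPTION: the same implied total is used for both years.
share_2010 = exemplary_2010 / implied_total * 100
share_2008 = exemplary_2008 / implied_total * 100

print(saved_by_tpm, round(implied_total), round(share_2010), round(share_2008))
```

Under those assumptions, the subtraction yields the 482 schools cited above, and the implied shares land at roughly a third of schools this year and about 13 percent two years ago, in line with the figures in the story.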

The “unacceptable” label, though highly controversial among educators, nonetheless has the power to spur frantic action at failing schools. Though such action does not guarantee success, district administrators and school faculties at state-designated failing campuses typically will work themselves to the bone to avoid the ultimate sanctions: school closures, forced staff transfers or overhauls of the academic program. Both state and federal mandates require such radical remaking of failed schools if the failure persists over several years. The Obama administration has recently poured $5 billion into turnaround projects for failing schools, at least $390 million of which could go to Texas. Among the turnaround methods required to get federal money — up to $2 million per school — are closing the school, handing over management to a charter operator, or transferring or firing at least half the staff, starting with the principal.

Such reform projects, though often threatened by state officials in Texas and elsewhere, remain fairly rare in practice, at least so far. Data on their effectiveness are far from definitive. But the results of maintaining the status quo — as will happen at hundreds of no longer "unacceptable" schools — are comparatively definitive: The same staff and program will likely achieve the same results.

“Patted on the back”

Scott, an attorney whose background is in law and politics rather than education, conducted Friday’s data release as if he were presenting to a grand jury: He employed a flurry of statistical arguments, laid out in charts and graphs, and called six witnesses from local school systems to indict media critics and defend the Texas Projection Measure.

Gail Stinson, the superintendent of Lake Dallas ISD, argued that the ratings helped students who failed and their teachers nonetheless feel good about progress. “For them to be patted on the back and recognized for those gains — in our case, double-digit gains [in science] — is what we want,” Stinson said. “We all need to remember this is about the kids and continuing improvement for students.”

Student performance may well have jumped in Lake Dallas. But with the projection measure stripped out, it did not improve much, if at all, on a statewide basis, according to the Tribune analysis of the ratings data.

Though the criticism has exclusively targeted the formula’s use in accountability ratings, most of the comments from local officials centered on the utility of the projection measure in classrooms. The data can help educators spotlight students who need help and soothe those who fail but still get close, the educators said. Buck Gilcrease, the superintendent of Hillsboro ISD, relayed a tale of how, after a pep rally in one school’s gym celebrating its newly boosted ranking, many students eagerly came by the school office asking, “Did I pass?”

Some of them had failed, said Gilcrease (who did not name the school). “But we could sit down with them and show them the TPM and say, ‘Look, you did help the rating for our school, and if you continue with the amazing growth process you are on, you will be part of the success of this school.’”

What went unsaid at the news conference was the fact that using projection data to diagnose or counsel students has nothing whatsoever to do with using it to inflate school ratings. If the state revoked two years of artificially boosted ratings data tomorrow, teachers could still use individual student projection data in the same ways they always have. Moreover, the question of whether the measure has statistical validity in a classroom setting has nothing to do with the question of statistical validity of using the formula to, for instance, more than double the number of “exemplary” schools overnight with little improvement in real performance.

In Texas accountability, as in horseshoes and hand grenades, close enough counts. Separately, the way the state credits almost-passers in school ratings has nearly eliminated “failure” from the educational lexicon, along with its attendant consequences — and lessons.
